Storage Policy-Based Management (SPBM) in vSAN

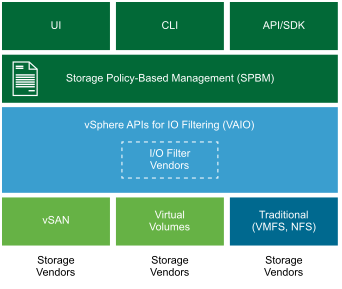

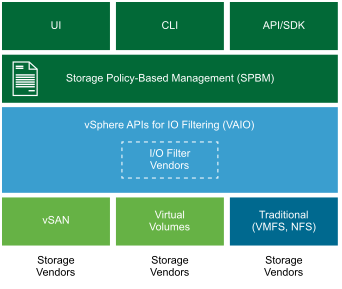

Storage Policy-Based Management (SPBM) is a major element of your software-defined storage environment. It is a storage policy framework that provides a single unified control plane across a broad range of data services and storage solutions.

Traditional storage solutions typically present a LUN or volume, created from a RAID group, that provides a specific level of performance and availability. The challenge with this model is that each LUN or volume can provide only one level of service, regardless of the workloads it contains. As a result, we have to create multiple LUNs or volumes to provide the storage services that different workloads require, and maintaining many LUNs or volumes is difficult. Managing workloads and storage deployment in a traditional environment is also a manual process, which is time-consuming and prone to human error.

By using Storage Policy-Based Management (SPBM), we gain precise control of storage services. Like other storage solutions, vSAN provides services such as availability levels, capacity consumption, and stripe widths for performance. A storage policy contains one or more rules that define these service levels.
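To make the contrast with the one-LUN-one-service-level model concrete, here is a minimal Python sketch that models a storage policy as a named collection of rules and applies two different policies to VMs that share a single datastore. The class names, rule keys, and values (`StoragePolicy`, `failures_to_tolerate`, `gold`, `bronze`, and so on) are hypothetical illustrations, not the vSphere API.

```python
from dataclasses import dataclass, field


@dataclass
class StoragePolicy:
    """A named set of rules; each rule maps a capability to the requested value."""
    name: str
    rules: dict = field(default_factory=dict)


@dataclass
class VirtualMachine:
    name: str
    datastore: str
    policy: StoragePolicy


# Hypothetical rule names that loosely mirror the vSAN settings described later in this post.
gold = StoragePolicy("gold", {"failures_to_tolerate": 2, "stripe_width": 2})
bronze = StoragePolicy("bronze", {"failures_to_tolerate": 1, "stripe_width": 1})

# Both VMs share one datastore yet receive different service levels,
# something a single LUN backed by a single RAID level cannot offer.
db = VirtualMachine("sql01", "vsanDatastore", gold)
web = VirtualMachine("web01", "vsanDatastore", bronze)

for vm in (db, web):
    print(vm.name, vm.datastore, vm.policy.name, vm.policy.rules)
```

The point of the sketch is that the service level travels with the VM through its policy, not with the datastore.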

As an abstraction layer, SPBM hides the details of the storage services delivered by Virtual Volumes, vSAN, I/O filters, or other storage entities. Multiple partners and vendors can provide Virtual Volumes, vSAN, or I/O filter support. Rather than integrating with each individual vendor or type of storage and data service, SPBM provides a universal framework for many types of storage entities.

SPBM offers the following mechanisms:

- Advertisement of storage capabilities and data services that storage arrays and other entities, such as I/O filters, offer.
- Bidirectional communications between ESXi and vCenter Server on one side, and storage arrays and entities on the other.
- Virtual machine provisioning based on VM storage policies.
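The advertisement and provisioning mechanisms can be illustrated with a small Python sketch: each storage entity advertises the capabilities it supports, and a policy is matched against those advertisements to find compatible datastores before a VM is placed. This is a simplified model under stated assumptions (every capability is a single number and "compatible" means the requested value does not exceed the advertised maximum); `Datastore`, `is_compatible`, `compatible_datastores`, and the capability names are hypothetical, and in a real environment this exchange happens between vCenter Server and the storage providers.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    """A storage entity and the capabilities it advertises (hypothetical model)."""
    name: str
    capabilities: dict  # capability name -> maximum value the entity supports


def is_compatible(policy_rules: dict, datastore: Datastore) -> bool:
    """True if every rule requests no more than the datastore advertises."""
    for capability, requested in policy_rules.items():
        offered = datastore.capabilities.get(capability)
        if offered is None or requested > offered:
            return False
    return True


def compatible_datastores(policy_rules: dict, datastores: list) -> list:
    """Names of the datastores that can satisfy the policy, in the order given."""
    return [ds.name for ds in datastores if is_compatible(policy_rules, ds)]


# Hypothetical advertisements: a small vSAN cluster and a datastore with no FTT capability.
vsan = Datastore("vsanDatastore", {"failures_to_tolerate": 1, "stripe_width": 12})
legacy = Datastore("legacyLun", {"stripe_width": 1})

print(compatible_datastores({"failures_to_tolerate": 1, "stripe_width": 2}, [vsan, legacy]))
```

A datastore that does not advertise a capability at all is treated as incompatible with any rule on that capability, which is the conservative choice for a placement check.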

We use the vSphere Web Client to create and manage storage policies. Policies can be assigned to virtual machines and to individual objects such as a virtual disk. Storage policies are easily changed or reassigned if application requirements change. These modifications are performed without downtime and without the need to migrate virtual machines from one datastore to another. SPBM makes it possible to assign and modify service levels with precision on a per-virtual machine basis.
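The per-object granularity described above can be sketched in Python as a VM whose objects (the VM home namespace and each virtual disk) each carry their own policy, so one disk's policy can change while the VM stays on the same datastore. The classes, method names, and policy names are hypothetical; in a real cluster vSAN may also rebuild components in the background to satisfy the new rules, which this sketch does not model.

```python
from dataclasses import dataclass, field


@dataclass
class VmObject:
    """One object belonging to a VM, e.g. the VM home namespace or a VMDK."""
    name: str
    policy: str


@dataclass
class VirtualMachine:
    name: str
    datastore: str
    objects: dict = field(default_factory=dict)  # object name -> VmObject

    def set_policy(self, object_name: str, policy: str) -> None:
        """Reassign the policy of a single object; the datastore is unchanged."""
        self.objects[object_name].policy = policy

    def set_policy_all(self, policy: str) -> None:
        """Apply one policy to every object of the VM."""
        for obj in self.objects.values():
            obj.policy = policy


vm = VirtualMachine("app01", "vsanDatastore")
for name in ("vm-home", "disk-1", "disk-2"):
    vm.objects[name] = VmObject(name, "bronze")

# Only the data disk needs more protection, so only its policy changes.
vm.set_policy("disk-2", "gold")
print({n: o.policy for n, o in vm.objects.items()}, vm.datastore)
```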

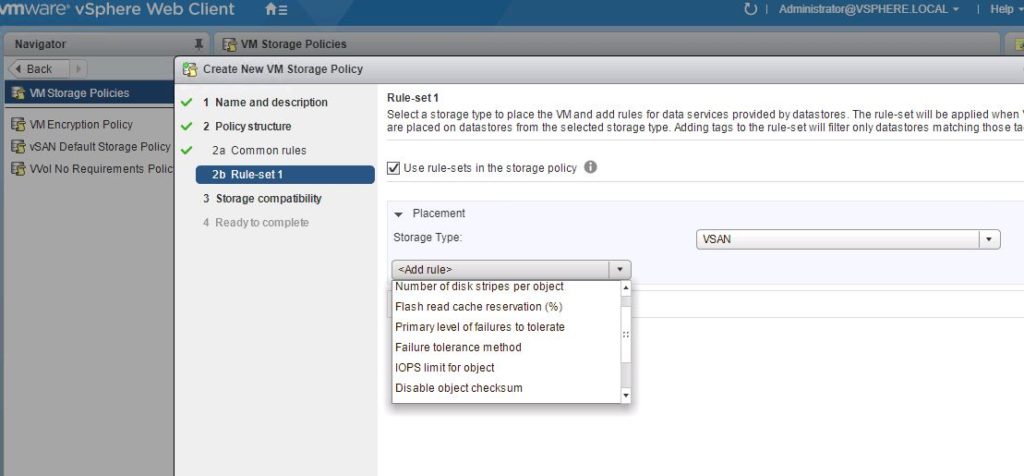

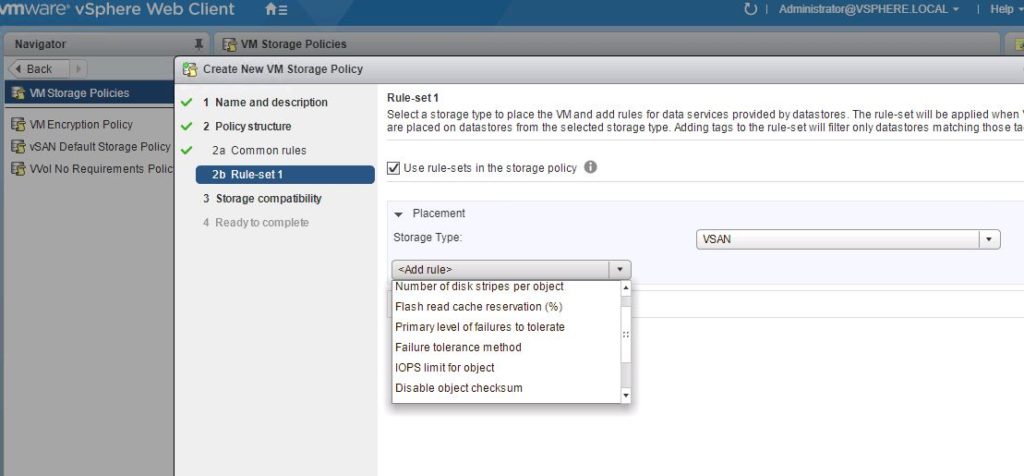

To be deployed in the vSAN cluster, a VM must be bound to a VM Storage Policy. The policy lets you configure the following settings per VM:

- IOPS limit for object: This setting provides a quality-of-service feature that limits the number of IOPS an object may consume. IOPS limits are enabled and applied through a policy setting. The setting can be used to ensure that a particular virtual machine does not consume more than its fair share of resources or negatively impact the performance of the cluster as a whole.

- Number of disk stripes per object: This setting defines the number of stripes for a VM object such as a VMDK; the default value is 1. If you choose two stripes, for example, the VMDK is striped across two physical disks. Change this value only if you have performance issues and you have identified that they are caused by a lack of IOPS on the physical disks.

- Failures to tolerate (FTT): This setting defines the level of redundancy in a vSAN cluster. By default, FTT is set to 1, which means the cluster is designed to tolerate a 1-node failure without any data loss. A higher level of redundancy can be set to protect against multiple nodes failing concurrently, ensuring a higher degree of cluster reliability and uptime. However, this comes at the expense of maintaining multiple copies of data, which increases the number of writes needed to complete one transaction. A higher degree of redundancy also requires a larger cluster; with RAID-1 mirroring, the minimum number of cluster nodes is 2 × FTT + 1.

Note: FTT can be set higher than 1 for higher levels of redundancy in the system and to survive multiple host failures. However, a higher FTT impacts performance because every write operation must be committed to multiple copies of the data.
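The FTT arithmetic above is easy to check with a short Python sketch for RAID-1 mirroring: FTT + 1 full copies are written, the cluster needs at least 2 × FTT + 1 hosts, and raw capacity grows with the number of copies. The function name `raid1_requirements` is hypothetical, the 0 to 3 range reflects the FTT values vSAN exposes for mirroring, and the sketch ignores witness and metadata overhead.

```python
def raid1_requirements(ftt, vmdk_gb):
    """Minimum hosts, replica count and raw capacity for a RAID-1 (mirrored) object."""
    if not 0 <= ftt <= 3:
        raise ValueError("FTT for mirroring ranges from 0 to 3")
    replicas = ftt + 1       # every write must land on each full copy
    min_hosts = 2 * ftt + 1  # ftt + 1 replicas plus ftt witnesses, each on its own host
    return {"min_hosts": min_hosts, "replicas": replicas, "raw_gb": vmdk_gb * replicas}


for ftt in (1, 2, 3):
    r = raid1_requirements(ftt, vmdk_gb=100)
    print(f"FTT={ftt}: {r['min_hosts']} hosts, {r['replicas']} copies, {r['raw_gb']} GB raw")
```

For a 100 GB VMDK this prints 3 hosts and 200 GB raw at FTT=1, 5 hosts and 300 GB at FTT=2, and 7 hosts and 400 GB at FTT=3, which shows why each extra level of redundancy costs both hosts and capacity.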

- Failure tolerance method: This setting specifies either RAID-1 (mirroring) or RAID-5/6 (erasure coding). The RAID layout is implemented over the network and spread across the vSAN nodes, and it works in conjunction with FTT. For example, if you set FTT to 1, the RAID-1 configuration requires at least three nodes and RAID-5 requires at least four nodes (we will go deeper into this aspect in the next section).

- Flash read cache reservation (%): This setting is used only in hybrid vSAN configurations (SSD + HDD). It defines the amount of flash capacity reserved as read cache for a storage object such as a VMDK, expressed as a percentage of the object's logical size.

- Disable object checksum: vSAN uses checksums to detect whether an object is corrupted and to repair the issue automatically. Checksums are verified when data is read, and by default a background scrub of data at rest runs once a year. You can disable checksums with this setting, but it is not recommended.

- Force provisioning: The VM Storage Policy verifies that the datastore can satisfy the rules defined in the policy. If the datastore is not compliant, the VM is not deployed. If you enable Force provisioning, the VM is deployed even if the datastore cannot satisfy the policy.

- Object space reservation: By default, this value is set to 0%, so the VMDK is thin-provisioned on the vSAN datastore. If you change this value to 100%, the VMDK is deployed as thick-provisioned. If you set the value to 50%, half of the VMDK capacity is reserved. If deduplication is enabled, you can set this value only to 0% or 100%, not to anything in between.

Note: Deduplication is available only for all-flash configurations.
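Several of the settings above interact, so here is a Python sketch that estimates the capacity footprint of one VMDK from its failure tolerance method, FTT, and object space reservation, while rejecting the combinations this post rules out (deduplication outside all-flash, and reservations other than 0% or 100% when deduplication is on). It assumes the usual vSAN layouts (RAID-5 as 3 data + 1 parity, RAID-6 as 4 data + 2 parity), takes `osr_percent` as an integer, and assumes the reservation scales with the same protection overhead as the data. `LAYOUTS` and `vmdk_footprint` are hypothetical names, and the model ignores witness components, metadata, and slack space.

```python
from fractions import Fraction

# (failure tolerance method, FTT) -> (capacity multiplier, minimum hosts).
# RAID-1 writes FTT + 1 full copies; RAID-5 is 3 data + 1 parity, RAID-6 is 4 data + 2 parity.
LAYOUTS = {
    ("RAID-1", 1): (Fraction(2), 3),
    ("RAID-1", 2): (Fraction(3), 5),
    ("RAID-1", 3): (Fraction(4), 7),
    ("RAID-5", 1): (Fraction(4, 3), 4),
    ("RAID-6", 2): (Fraction(3, 2), 6),
}


def vmdk_footprint(size_gb, method, ftt, osr_percent=0, dedup=False, all_flash=True):
    """Estimate raw capacity and up-front reservation for one VMDK (simplified model)."""
    if dedup and not all_flash:
        raise ValueError("deduplication is available only on all-flash configurations")
    if not 0 <= osr_percent <= 100:
        raise ValueError("object space reservation is a percentage between 0 and 100")
    if dedup and osr_percent not in (0, 100):
        raise ValueError("with deduplication, object space reservation must be 0% or 100%")
    if (method, ftt) not in LAYOUTS:
        raise ValueError(f"unsupported combination: {method} with FTT={ftt}")
    multiplier, min_hosts = LAYOUTS[(method, ftt)]
    raw_gb = size_gb * multiplier                      # space consumed once fully written
    reserved_gb = raw_gb * Fraction(osr_percent, 100)  # space claimed up front (0 = thin)
    return {"min_hosts": min_hosts, "raw_gb": raw_gb, "reserved_gb": reserved_gb}


for method, ftt in [("RAID-1", 1), ("RAID-5", 1), ("RAID-6", 2)]:
    fp = vmdk_footprint(300, method, ftt, osr_percent=50)
    print(f"{method} FTT={ftt}: {fp['min_hosts']} hosts, "
          f"{float(fp['raw_gb']):.0f} GB raw, {float(fp['reserved_gb']):.0f} GB reserved")
```

Using `Fraction` keeps the RAID-5 overhead of 4/3 exact, so a 300 GB VMDK comes out at exactly 400 GB raw rather than a rounded float.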