In my previous post I explained how containers differ from physical and virtual infrastructure. I also mentioned Docker in that post but did not share more details, because I did not want you to confuse containers with Docker.

In this post I will explain what Docker is, what its components are, and how containers relate to Docker. More posts will follow soon to help you start playing with containers in your lab.

Docker is an open source platform used to package, distribute, and run applications. Docker provides an easy and efficient way to encapsulate an application, separate from the underlying infrastructure, as a single Docker image, which can be shared through a central Docker registry. The Docker image is used to launch a Docker container, which makes the contained application available from the host where the container is running.

In simple words, Docker is a containerization platform: an OS-level virtualization method used to deploy and run a distributed application and all its dependencies together in the form of a Docker container. Docker does not need a hypervisor layer; containers run directly on top of the host operating system and share its kernel. Using Docker, you can run multiple isolated applications or services on a single host, all accessing the same OS kernel, and ensure that each application works the same way in any environment.

Containers can run on bare-metal systems with a supported Linux, Windows, or Mac operating system and on cloud instances; they can also run on virtual machines deployed on any hypervisor.

For developers the concept of Docker may be easy to understand, but for system administrators it may be more difficult. Don't worry: here I will explain the components of Docker and how they are used.

Docker is available in two editions:

Docker Community Edition (CE) is ideal for individual developers and small teams looking to get started with Docker and experimenting with container-based apps.

Docker Enterprise Edition (EE) is designed for enterprise development and IT teams who build, ship, and run business critical applications in production at scale.

Docker Components

What is Docker Engine?

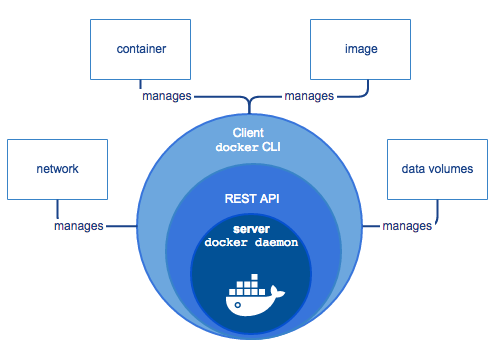

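Docker Engine is the core of the Docker system; it is the application installed on the host machine. Docker Engine is a client-server application with these major components:

- A server, which is a long-running program called a daemon process (the dockerd command).
- A REST API, which specifies interfaces that programs can use to talk to the daemon and instruct it what to do.
- A command line interface (CLI) client (the docker command).

The CLI uses the Docker REST API to control or interact with the Docker daemon through scripting or direct CLI commands. Many other Docker applications use the underlying API and CLI.

The daemon creates and manages Docker objects, such as images, containers, networks, and volumes.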

Docker architecture

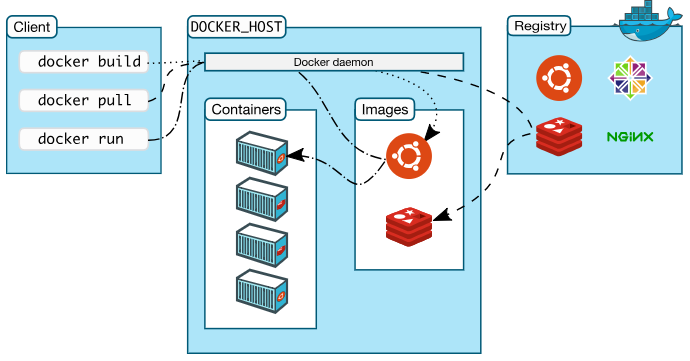

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.
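To make the "REST API over a UNIX socket" part concrete, here is a minimal Python sketch of what a client does under the hood. It assumes a Linux host where the daemon listens on the default socket `/var/run/docker.sock`; `GET /containers/json` is the Engine API endpoint that lists running containers (what `docker ps` shows). The helpers `build_request` and `call_daemon` are hypothetical names for illustration, not part of any Docker SDK.

```python
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux (assumption: not reconfigured)


def build_request(method: str, path: str, body: bytes = b"") -> bytes:
    """Build a raw HTTP/1.1 request for the Docker Engine REST API."""
    headers = [
        f"{method} {path} HTTP/1.1",
        "Host: docker",         # HTTP/1.1 requires a Host header; over a UNIX socket the value is arbitrary
        "Connection: close",    # ask the daemon to close the connection after it replies
        f"Content-Length: {len(body)}",
    ]
    return ("\r\n".join(headers) + "\r\n\r\n").encode("ascii") + body


def call_daemon(method: str, path: str, socket_path: str = DOCKER_SOCKET) -> str:
    """Send one request to the daemon over its UNIX socket and return the raw HTTP response."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request(method, path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # daemon closed the connection: response is complete
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

On a host with Docker running (and permission to use the socket), `call_daemon("GET", "/containers/json")` returns the HTTP response whose JSON body lists the running containers. Pointing `socket_path` at a different daemon is the same idea as a single client talking to more than one daemon.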

Note: The Docker Engine and architecture information above is taken from the Docker documentation.

Docker daemon

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

Docker Client

The Docker client (docker) is the primary way many Docker users interact with Docker. When you run docker commands, the client sends them to dockerd, which carries them out. The docker command uses the Docker API, and a single Docker client can communicate with more than one daemon.

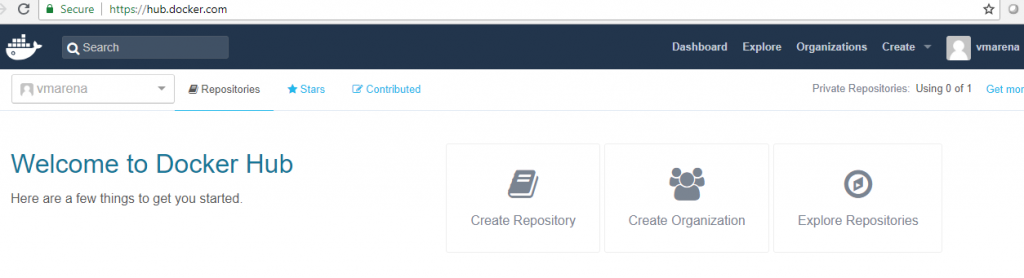

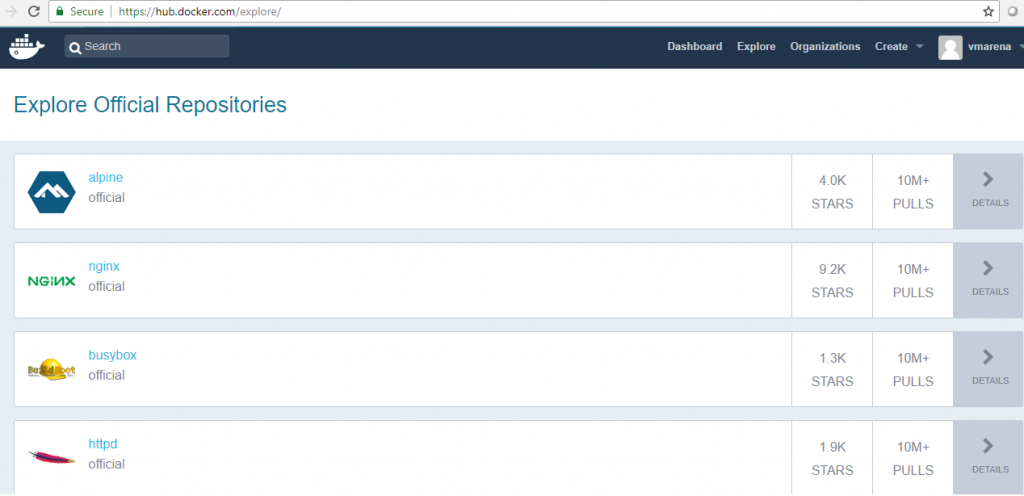

A Docker registry is where Docker images are stored. It can be a public registry or a local (private) registry. Docker Hub and Docker Cloud are public registries that anyone can use; the other option is to run your own private registry. Docker is configured to look for images on Docker Hub by default. If you use Docker Datacenter (DDC), it includes Docker Trusted Registry (DTR).

How Docker Registry Works?

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry.
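Because Docker Hub is the default registry, a short name such as `centos` passed to `docker pull` is expanded to a full reference (`docker.io/library/centos:latest`). The sketch below shows that expansion in Python. It is simplified and hypothetical: `parse_reference` is an illustrative helper, and it ignores digests (`name@sha256:...`) and other edge cases that Docker's real parser handles.

```python
DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


def parse_reference(ref: str) -> tuple:
    """Split an image reference into (registry, repository, tag). Simplified sketch."""
    name, tag = ref, DEFAULT_TAG
    # A tag is a ':' inside the last path component; an earlier ':' may be a registry port.
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    parts = name.split("/", 1)
    first = parts[0]
    # The first component is treated as a registry host only if it looks like one.
    if len(parts) == 2 and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, parts[1]
    else:
        registry, repo = DEFAULT_REGISTRY, name
    # Single-name images on Docker Hub live in the "library" namespace (official images).
    if registry == DEFAULT_REGISTRY and "/" not in repo:
        repo = "library/" + repo
    return registry, repo, tag
```

For example, `parse_reference("localhost:5000/myapp:1.0")` points at a private registry on `localhost:5000`, while `parse_reference("nginx")` resolves to the official image on Docker Hub.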

Docker Store allows you to buy and sell Docker images or distribute them for free.

You also have the option to buy a Docker image containing an application or service from a software vendor and use the image to deploy the application into your testing, staging, and production environments. You can upgrade the application by pulling the new version of the image and redeploying the containers.

Docker Environment

The Docker environment is the combination of Docker Engine and Docker objects. I have already explained Docker Engine; now let's look at the Docker objects: images, containers, networks, volumes, and plugins.

IMAGES

An image is a read-only template with instructions for creating a Docker container. You can create an image with additional customization from a base image or use those created by others and published in a registry.

Docker uses a layered file system in which the base layers are read-only and the top layer is writable. When you modify a file that lives in a base layer, Docker first copies it into the top layer and applies the change there (copy-on-write), so the base layer remains unchanged. Because the base layers are read-only and never change, they can be shared by many images and containers.
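The copy-on-write behaviour can be modelled in a few lines of Python. This is a toy sketch, not how a storage driver such as overlay2 is implemented: `LayeredFS` is a hypothetical class, layers are plain dictionaries mapping paths to file contents, and deletions are recorded as "whiteout" markers in the top layer because the lower layers cannot be changed.

```python
class LayeredFS:
    """Toy union file system: shared read-only lower layers plus one writable top layer."""

    _WHITEOUT = object()  # marker meaning "deleted in the top layer"

    def __init__(self, *lower_layers: dict):
        self.lower = list(lower_layers)  # index 0 is the base layer; never modified here
        self.upper: dict = {}            # this container's private writable layer

    def read(self, path: str) -> str:
        # Search the top layer first, then the lower layers from newest to oldest.
        for layer in [self.upper, *reversed(self.lower)]:
            if path in layer:
                if layer[path] is self._WHITEOUT:
                    break  # hidden by a deletion in the top layer
                return layer[path]
        raise FileNotFoundError(path)

    def exists(self, path: str) -> bool:
        try:
            self.read(path)
            return True
        except FileNotFoundError:
            return False

    def write(self, path: str, content: str) -> None:
        # All writes land in the top layer; lower layers stay untouched.
        self.upper[path] = content

    def append(self, path: str, extra: str) -> None:
        # Copy-up: read the current file (possibly from a lower layer),
        # then store the modified copy in the top layer.
        self.upper[path] = self.read(path) + extra

    def delete(self, path: str) -> None:
        # Lower layers are read-only, so a deletion is recorded as a whiteout.
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.upper[path] = self._WHITEOUT
```

Two `LayeredFS` objects built on the same base dictionary behave like two containers started from the same image: each sees its own changes, and the shared base stays identical for both.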

For example, you may build an image based on the CentOS image that installs a web server and your application, as well as the configuration details needed to make your application run.

How to Build Your Own Image

To build your own image, create a Dockerfile, which uses a simple syntax to define the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, Docker reuses the cached layers up to the first changed instruction and rebuilds only that layer and the layers after it. This reuse is part of what makes images lightweight, small, and fast to build.
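Docker's build cache reuses a step only if every step before it was also reused; once one instruction changes, that layer and all layers after it are rebuilt. The Python sketch below models this with chained hashes: each step's key covers its own instruction plus its parent's key. It is a simplification, and `cache_keys` and `plan_rebuild` are hypothetical helpers; real Docker also checks the contents of files used by `COPY` and `ADD`, which this sketch ignores.

```python
import hashlib


def cache_keys(instructions: list) -> list:
    """Chain each step's key to its parent's, so a change propagates to every later step."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def plan_rebuild(old: list, new: list) -> list:
    """Label each step of the new Dockerfile as reused from the cache or rebuilt."""
    cached = set(cache_keys(old))
    return ["CACHED" if key in cached else "REBUILD" for key in cache_keys(new)]
```

Changing only the `COPY` line of a four-step Dockerfile yields `CACHED, CACHED, REBUILD, REBUILD`: the final `CMD` layer is rebuilt even though its text did not change. This is why Dockerfiles usually put rarely changing steps (installing packages) before frequently changing ones (copying application code).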

CONTAINERS

In simple words, a container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and its host machine. You can control how isolated a container’s network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide to it when you create or start it. When a container is removed, any changes to its state that are not stored in persistent storage disappear.
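The difference between a container's own writable layer and persistent storage can be sketched as a small Python model. `Volume` and `Container` are hypothetical toy classes, not Docker objects; the idea corresponds roughly to running `docker run -v dbdata:/var/lib/mysql mysql:8`, where writes under `/var/lib/mysql` go to the named volume `dbdata` and everything else goes to the container's writable layer.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    """Docker-managed storage whose lifecycle is independent of any container."""
    name: str
    files: dict = field(default_factory=dict)


@dataclass
class Container:
    image: str
    mounts: dict = field(default_factory=dict)          # mount point -> Volume
    writable_layer: dict = field(default_factory=dict)  # changes not in persistent storage
    state: str = "created"

    def write(self, path: str, content: str) -> None:
        # Writes under a mount point go to the volume; everything else to the writable layer.
        for mount_point, volume in self.mounts.items():
            if path.startswith(mount_point.rstrip("/") + "/"):
                volume.files[path] = content
                return
        self.writable_layer[path] = content

    def remove(self) -> None:
        # Removing the container discards its writable layer; mounted volumes are untouched.
        self.writable_layer.clear()
        self.state = "removed"
```

After `remove()`, a new container that mounts the same `Volume` object still sees the data written there, while anything written elsewhere is gone.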

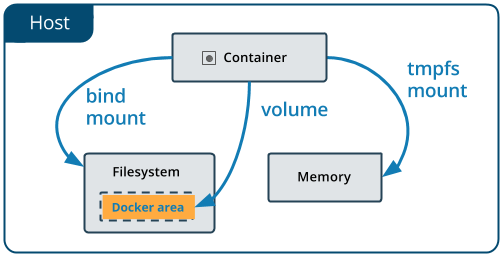

Volumes

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers. While bind mounts are dependent on the directory structure of the host machine, volumes are completely managed by Docker.

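Advantages of Volumes over Bind Mounts

- Volumes are easier to back up or migrate than bind mounts.
- You can manage volumes using Docker CLI commands or the Docker API.
- Volumes work on both Linux and Windows containers.
- Volumes can be more safely shared among multiple containers.
- Volume drivers let you store volumes on remote hosts or cloud providers, encrypt the contents of volumes, or add other functionality.
- New volumes can have their content pre-populated by a container.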

In addition, volumes are often a better choice than persisting data in a container’s writable layer, because a volume does not increase the size of the containers using it, and the volume’s contents exist outside the lifecycle of a given container.

If your container generates non-persistent state data, consider using a tmpfs mount to avoid storing the data anywhere permanently, and to increase the container’s performance by avoiding writing into the container’s writable layer.

Volumes use rprivate bind propagation, and bind propagation is not configurable for volumes.

Bind mounts

Bind mounts have been around since the early days of Docker, but they have limited functionality compared to volumes. When you use a bind mount, a file or directory on the host machine is mounted into a container. The file or directory is referenced by its full or relative path on the host machine. By contrast, when you use a volume, a new directory is created within Docker’s storage directory on the host machine, and Docker manages that directory’s contents.

The file or directory does not need to exist on the Docker host already; it is created on demand if it does not yet exist. Bind mounts are very performant, but they rely on the host machine’s filesystem having a specific directory structure available. If you are developing new Docker applications, consider using named volumes instead. You can’t use Docker CLI commands to directly manage bind mounts.

Network

One of the reasons Docker containers and services are so powerful is that you can connect them together, or connect them to non-Docker workloads. Docker containers and services do not even need to be aware that they are deployed on Docker, or whether their peers are also Docker workloads or not. Whether your Docker hosts run Linux, Windows, or a mix of the two, you can use Docker to manage them in a platform-agnostic way.

Docker’s networking subsystem is pluggable, using drivers. Several drivers exist by default and provide core networking functionality:

- bridge: the default network driver, usually used when applications run in standalone containers that need to communicate on the same Docker host.
- host: for standalone containers, removes network isolation between the container and the Docker host and uses the host’s networking directly.
- overlay: connects multiple Docker daemons together and enables swarm services to communicate with each other.
- macvlan: assigns a MAC address to a container, making it appear as a physical device on your network.
- none: disables all networking for a container; usually used together with a custom network driver.
- Network plugins: third-party network plugins that you can install and use with Docker.

Note: host networking is only available for swarm services on Docker 17.06 and higher.

Note: none is not available for swarm services.

Which Network driver is suitable?

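Most of the above network drivers apply to all Docker installations. However, a few advanced features are only available to Docker EE customers.

Two features are only possible when using Docker EE and managing your Docker services using Universal Control Plane (UCP):

- The HTTP routing mesh allows you to share the same network IP address and port among multiple services. UCP routes the traffic to the appropriate service using the combination of hostname and port, as requested from the client.
- Session stickiness allows you to specify information in the HTTP header that UCP uses to route subsequent requests to the same service task, for applications that require stateful sessions.

Docker Services

Services allow you to scale containers across multiple Docker daemons, which all work together as a swarm with multiple managers and workers. Each member of a swarm is a Docker daemon, and the daemons all communicate using the Docker API. A service allows you to define the desired state, such as the number of replicas of the service that must be available at any given time. By default, the service is load-balanced across all worker nodes. To the consumer, the Docker service appears to be a single application. Docker Engine supports swarm mode in Docker 1.12 and higher.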

Docker also provides a high-availability cluster mode called swarm. Swarm gives you features such as scaling, load balancing, and automatic failover, which work best when your applications are stateless. There are many more features; see the Docker documentation for details.

In a swarm, you can deploy your app to a number of nodes running Docker Engine, and these nodes can be on different machines, in different data centers, or even split between Azure and AWS. If one of the nodes crashes or disconnects, the swarm reschedules the tasks that were running on it onto the remaining nodes, so the desired number of replicas keeps running.
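The "desired state" idea behind services can be illustrated with a small reconciliation function in Python. This is a hypothetical sketch, not swarm's actual scheduler (which also weighs constraints, resources, and other placement rules): `reconcile` keeps every replica slot that is still on a healthy node and places any missing slots on the least-loaded healthy node.

```python
def reconcile(replicas: int, nodes: list, placement: dict) -> dict:
    """Return a slot -> node mapping so that `replicas` tasks run on healthy nodes.

    placement maps replica slot (1..N) to the node it last ran on. Slots on nodes
    missing from `nodes` are treated as lost; slots above `replicas` are dropped.
    Assumes `nodes` is not empty.
    """
    # Keep tasks that are still within the desired count and on a healthy node.
    result = {slot: node for slot, node in placement.items()
              if node in nodes and slot <= replicas}
    load = {node: 0 for node in nodes}
    for node in result.values():
        load[node] += 1
    # Fill every missing slot on the least-loaded healthy node (ties broken by name).
    for slot in range(1, replicas + 1):
        if slot not in result:
            target = min(nodes, key=lambda n: (load[n], n))
            result[slot] = target
            load[target] += 1
    return result
```

Calling it again with a larger `replicas` value is the same idea as `docker service scale web=5`; calling it with a node removed from `nodes` mimics a node crash, and the lost task is recreated on a surviving node.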

Note: This is an important topic that needs more detail than this post can cover. I will share it in another post with examples; in the meantime, you can find more details in the Docker documentation.

Docker Underlying Technology

Docker is written in Go and takes advantage of several features of the Linux kernel to deliver its functionality.

Namespaces

Docker uses a technology called namespaces to provide the isolated workspace called the container. When you run a container, Docker creates a set of namespaces for that container.

These namespaces provide a layer of isolation. Each aspect of a container runs in a separate namespace and its access is limited to that namespace.
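On Linux you can see which namespaces a process belongs to by reading the symbolic links under `/proc/<pid>/ns`; two processes share a namespace when the identifiers match. The Python sketch below (`namespaces` is a hypothetical helper) reads them. As an assumed usage, you could compare `namespaces("self")` on the host with `namespaces(pid)`, where `pid` is the host PID of a container's main process from `docker inspect --format '{{.State.Pid}}' <container>`; reading another process's entries typically requires root.

```python
import os


def namespaces(pid: str = "self") -> dict:
    """Map namespace type -> identifier for a process, read from /proc/<pid>/ns (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, /proc not mounted, or no such process
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like 'pid:[4026531836]'.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable without extra privileges
    return result
```

A process inside a container will usually show different `pid`, `net`, `mnt`, `ipc`, and `uts` identifiers from a process on the host, which is exactly the isolation described above.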

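Docker Engine uses namespaces such as the following on Linux:

- The pid namespace: process isolation (PID: Process ID).
- The net namespace: managing network interfaces (NET: Networking).
- The ipc namespace: managing access to IPC resources (IPC: InterProcess Communication).
- The mnt namespace: managing filesystem mount points (MNT: Mount).
- The uts namespace: isolating kernel and version identifiers (UTS: Unix Timesharing System).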

Control groups

Docker Engine on Linux also relies on another technology called control groups (cgroups). A cgroup limits an application to a specific set of resources. Control groups allow Docker Engine to share available hardware resources to containers and optionally enforce limits and constraints. For example, you can limit the memory available to a specific container.
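A memory limit such as `docker run --memory 512m` ultimately becomes a number of bytes written into the container's cgroup. The Python sketch below shows both halves under stated assumptions: `parse_memory` converts sizes with binary units (b, k, m, g), and `set_memory_limit` writes the value to `memory.max`, the cgroup v2 interface file (cgroup v1 uses `memory.limit_in_bytes` instead). Both helpers are hypothetical; in practice the container runtime manages these files for you, and writing to a real cgroup directory requires root.

```python
import os
import re

_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_memory(limit: str) -> int:
    """Convert a size such as '512m' or '1g' to bytes (binary units)."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", limit.strip().lower())
    if not match:
        raise ValueError(f"invalid memory size: {limit!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit or "b"]


def set_memory_limit(cgroup_dir: str, limit: str) -> None:
    """Write the limit to memory.max in a cgroup v2 directory; the kernel then enforces it."""
    with open(os.path.join(cgroup_dir, "memory.max"), "w") as f:
        f.write(str(parse_memory(limit)))
```

For example, `parse_memory("512m")` gives 536870912 bytes; once that value is in the container's `memory.max`, the kernel reclaims memory or invokes the OOM killer when the container's processes try to exceed it.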

Union file systems

Union file systems, or UnionFS, are file systems that operate by creating layers, making them very lightweight and fast. Docker Engine uses UnionFS to provide the building blocks for containers. Docker Engine can use multiple UnionFS variants, including AUFS, btrfs, vfs, and DeviceMapper.

Container Format

Docker Engine combines the namespaces, control groups, and UnionFS into a wrapper called a container format. The default container format is libcontainer. In the future, Docker may support other container formats by integrating with technologies such as BSD Jails or Solaris Zones.

Refer to the Docker documentation to learn more.
